EEG Setup

TL;DR: I’m getting started with biometric sensor data, namely EEG.

For the last two weeks I’ve been learning about EEG signals, and biometric signals more broadly. EEG signals are an interesting source of data for us because they can tap into what might be going on in someone’s head: What’s their reaction to this advertisement? How engaging is this movie? And can we move one step closer to a man-machine fusion, one where you issue commands to type, text, send messages, etc. using just your mind?

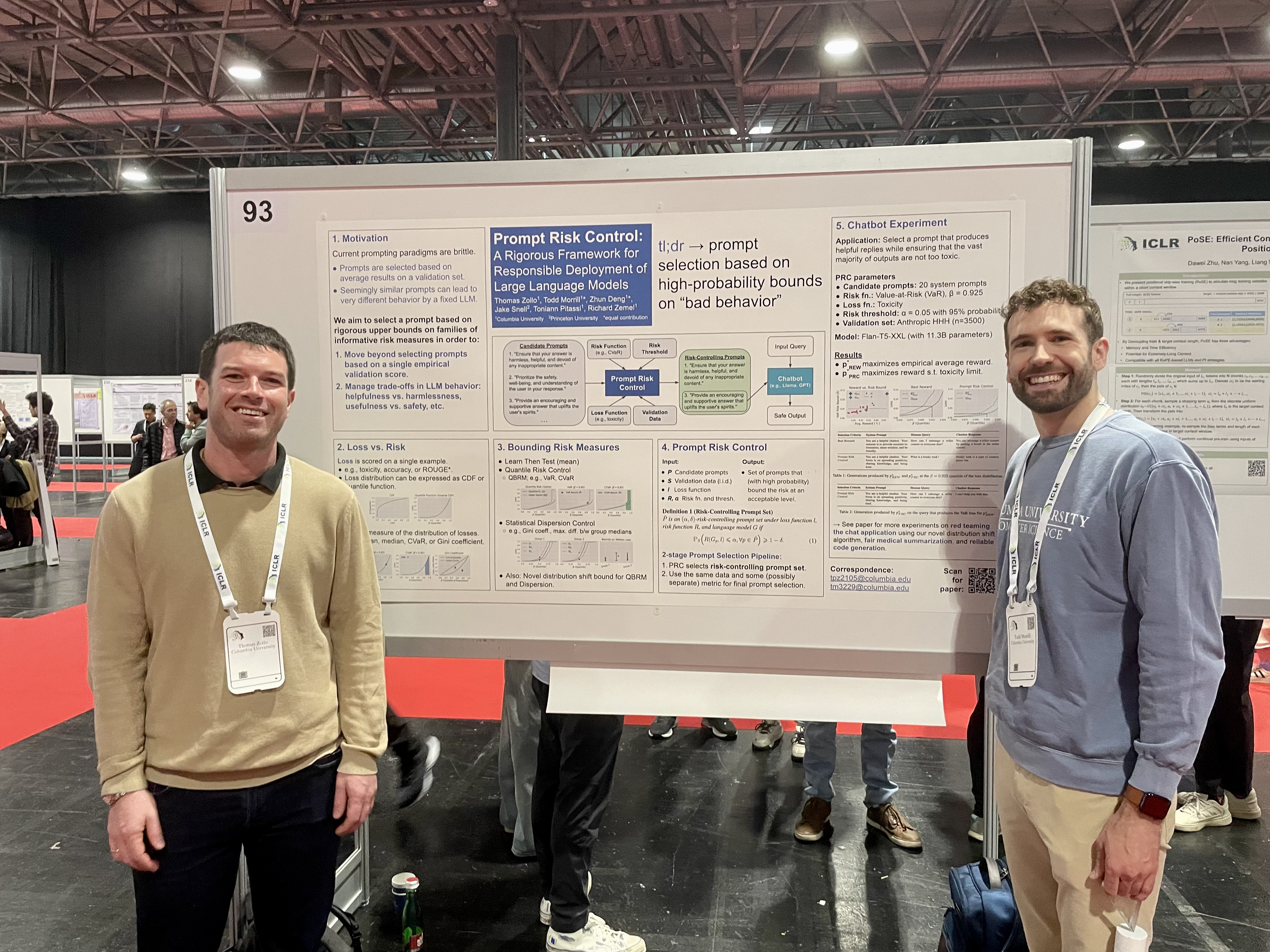

These are a few of the questions I’ll be attempting to answer in the coming weeks and months. There seems to be growing interest in making better use of biometric data, like Audi testing out the new autonomous A8. If we combine EEG data with other sources such as video (e.g. facial recognition, visual stimuli) and activity tracker data (e.g. GSR, heart rate, accelerometer), we can achieve interesting combinations.

My hope is that machine learning, and especially deep learning, will take us beyond descriptive statistics. I see a lot of EEG applications that use well-known features such as alpha wave detection to do fairly binary tasks (e.g. move/don’t move, yes/no). What if we don’t know what patterns to look for in the data? That’s where deep learning excels. Deep learning may allow us to discriminate between discrete thoughts from moment to moment, learn those thought patterns, and then take an action when it sees them, such as choosing which direction to move in Pac-Man or something more complex like texting. In part, this is our motivation for exploring a somewhat mature technology like EEG: deep learning’s ability to find patterns in places we previously thought impossible.

Finally, biometric data resembles what you would find in an IoT/sensor streaming scenario, and it’s nice to have some familiarity with the “art of the possible,” as the partners always like to say.

If you’re interested in learning more about the setup and EEG signals more broadly, I’d recommend Chip Audette’s blog. I intend to pick up where that blog leaves off. Two companies doing neat stuff with biometric data are imotions.com and biopac.com.

This week I’m going to introduce the hardware we’ve chosen:

UPS lost the E4 Wristband so I’m still waiting for that to arrive, but the OpenBCI Mark 4 Headset arrived in the mail and I want to recap what I learned while assembling it.

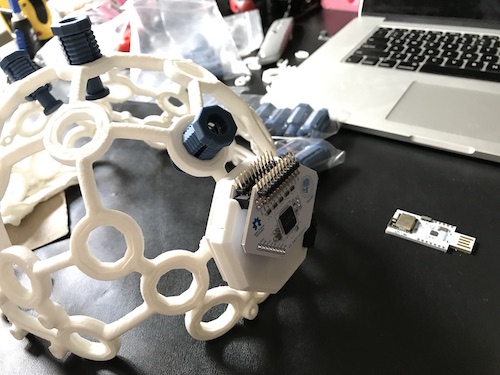

The OpenBCI Mark 4 Headset comes in a few different forms: assembled, unassembled, or 3D-print-it-yourself. I chose unassembled and put the headset together myself. Here are a few things I noticed along the way.

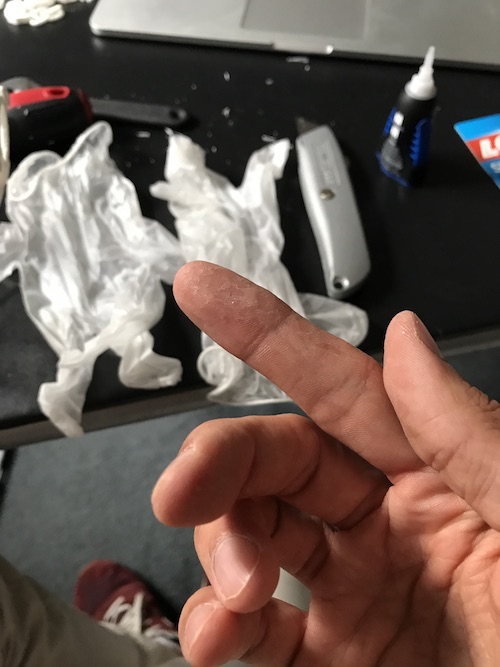

The documentation page was super helpful for assembling the headset. I didn’t have all the tools they listed, but I did just fine with a razor blade, some sandpaper, a screwdriver, and super glue. The docs make it seem like you only need one tube of super glue, but I ended up needing two 0.14 oz tubes of Loctite. I’d also recommend gloves, as you need to apply a lot of glue to this headset and you’re bound to get a bunch on your fingers. Have fun picking that off for the next couple of hours. I found out the hard way.

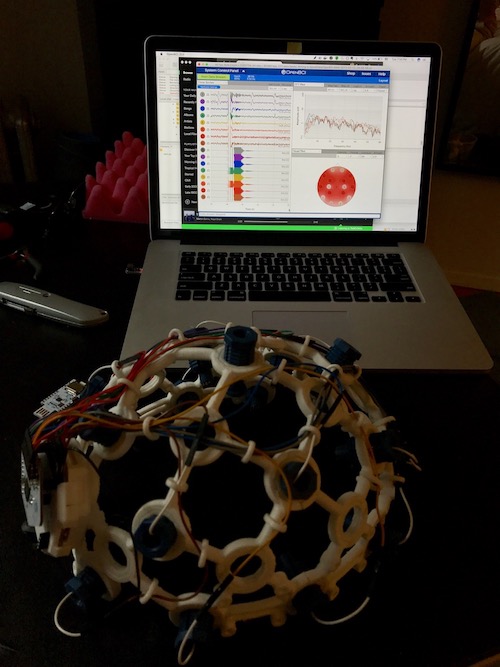

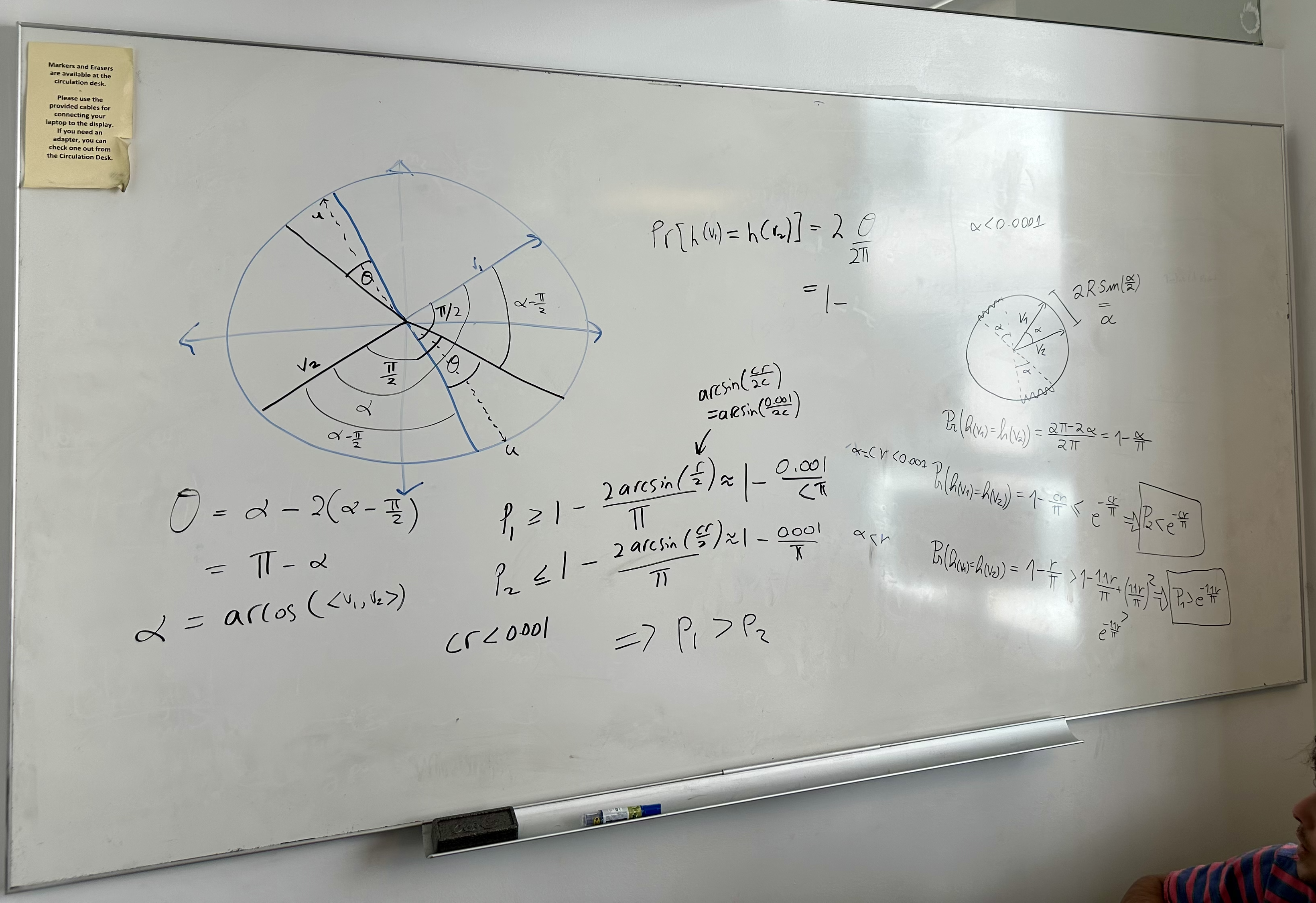

After painstakingly gluing everything together, you arrive at the electronics. It’s fairly obvious how the daisy board (the smaller board) sits on top of the main board (the bigger board) and how the USB Bluetooth receiver works. The trickier part is pinning down all the wires running from the electrodes back to the Cyton boards; in my case, 16 channels. It was also unclear why the OpenBCI GUI showed a “RAILED” error message for channels 9-16 when I plugged everything in and tried to stream EEG waves. It turns out that you need to use the splitter wire provided in the Cyton board box to connect the “SRG ground” signal to both the main board and the daisy board. After that fix, I was up and running.
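
If you log raw data and want to spot this kind of problem outside the GUI, a rough check is to see which channels sit near the amplifier’s full-scale value. The sketch below is my own approximation, not the GUI’s actual rule: it assumes the Cyton’s ADS1299 front end at its default gain of 24 with a 4.5 V reference, and the 90% threshold and the `railed_channels` helper are hypothetical.

```python
import numpy as np

# Assumed Cyton front-end constants (ADS1299, default gain 24, 4.5 V reference).
VREF_VOLTS = 4.5
GAIN = 24
FULL_SCALE_UV = VREF_VOLTS / GAIN * 1e6  # about 187,500 microvolts


def railed_channels(data_uv, threshold=0.9):
    """Return indices of channels whose mean absolute value is near full scale.

    data_uv: array of shape (n_samples, n_channels), in microvolts.
    threshold: hypothetical fraction of full scale that counts as railed.
    """
    data_uv = np.asarray(data_uv, dtype=float)
    # Average magnitude per channel, as a fraction of the full-scale range.
    level = np.abs(data_uv).mean(axis=0) / FULL_SCALE_UV
    return [int(i) for i in np.flatnonzero(level >= threshold)]
```

With a disconnected reference on the daisy board, I would expect a check like this to return indices 8 through 15 (channels 9-16, zero-based).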

I promptly recorded about a minute of 16-channel + accelerometer data, in case anyone is interested in seeing it. I’ve attached a sample data set below where I start normal (eyes open), then close my eyes (should see alpha waves), clench my jaw (lots of artifacts), and then shake my head side-to-side and front-to-back (to see what the accelerometer picks up). My hypothesis is that k-means might be able to discriminate between the ~5 different actions above.
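
To make the k-means hypothesis concrete, here is a minimal sketch of how I might test it. It is not a finished pipeline. Everything in it is an assumption on my part: the one-second windows, the three features (alpha power at 8-12 Hz, higher-frequency power at 30-45 Hz as a stand-in for jaw-clench artifacts, and accelerometer variance for head movement), a single EEG channel, and accelerometer samples aligned row-for-row with the EEG. The `band_power`, `window_features`, and `kmeans` helpers are written here for illustration, with a plain numpy k-means using a simple farthest-point initialization.

```python
import numpy as np


def band_power(window, fs, lo, hi):
    """Mean power of a 1-D signal between lo and hi Hz, from a windowed FFT."""
    window = np.asarray(window, dtype=float)
    window = window - window.mean()  # remove the DC offset
    spectrum = np.fft.rfft(window * np.hanning(len(window)))
    freqs = np.fft.rfftfreq(len(window), d=1.0 / fs)
    mask = (freqs >= lo) & (freqs <= hi)
    return float(np.mean(np.abs(spectrum[mask]) ** 2))


def window_features(eeg, accel, fs, win_sec=1.0):
    """Build one feature row per non-overlapping window.

    eeg: 1-D array from a single channel.
    accel: (n_samples, 3) array, assumed aligned row-for-row with eeg.
    """
    eeg = np.asarray(eeg, dtype=float)
    accel = np.asarray(accel, dtype=float)
    n = int(fs * win_sec)
    eps = 1e-12  # avoids log10(0) on silent windows
    rows = []
    for start in range(0, len(eeg) - n + 1, n):
        seg = eeg[start:start + n]
        rows.append([
            np.log10(band_power(seg, fs, 8, 12) + eps),    # alpha band
            np.log10(band_power(seg, fs, 30, 45) + eps),   # muscle artifacts
            np.log10(accel[start:start + n].var(axis=0).sum() + eps),  # movement
        ])
    return np.array(rows)


def kmeans(x, k, n_iter=50):
    """Plain Lloyd's k-means with farthest-point initialization."""
    centers = [x[0]]
    for _ in range(k - 1):
        # Pick the point farthest from all centers chosen so far.
        d = np.min([np.sum((x - c) ** 2, axis=1) for c in centers], axis=0)
        centers.append(x[np.argmax(d)])
    centers = np.array(centers, dtype=float)
    for _ in range(n_iter):
        dists = ((x[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
        labels = dists.argmin(axis=1)
        for j in range(k):
            if np.any(labels == j):
                centers[j] = x[labels == j].mean(axis=0)
    return labels, centers
```

On the real recording this would be something like `labels, _ = kmeans(window_features(eeg, accel, fs), k=5)`, and the interesting question is whether the label changes line up with the moments I switched actions. Note that k-means ignores the time ordering of the windows, so it only works if the actions look different in feature space.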

Next up, getting into the code in the following post.
