KubeCon 2019

TL;DR: I recently attended KubeCon, where I learned how Kubernetes is being used for machine learning model development.

Here are my high-level takeaways from the experience (2-minute read).

- A very dev-centric, open source community. This was my first “developer” conference, and the talks were fantastic. Every talk that I attended got to the crux of the technology, whether it was managing the state of your K8s application or the control-flow semantics of gRPC.

- The ecosystem is fragmented and many parts are at version < 1.0. There are many new startups and projects focusing on one narrow piece of Kubernetes infrastructure. For example, it seemed like there were several message queue startups/projects (e.g. NATS vs. KubeMQ) all doing the same thing. Well-resourced tech firms (e.g. Lyft and Snap) are creating workarounds for basic things (e.g. Snap customizing Kubeflow for artifact caching - brilliant!). These projects will likely consolidate and mature rapidly within the next 1-2 years. Stay tuned, but tread lightly lest you invest a lot of time and energy into something that goes bust in the next 6 months.

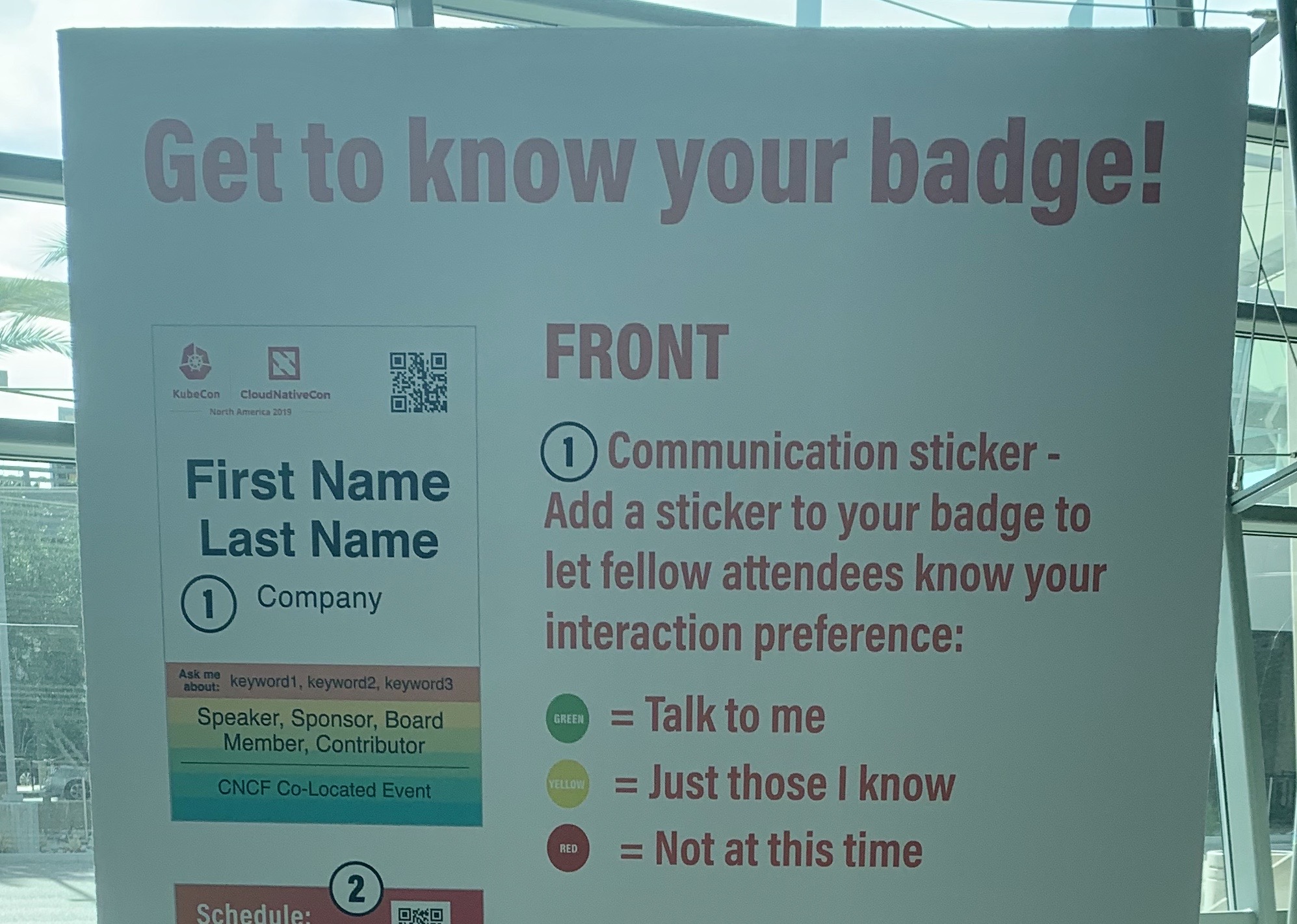

- It was a very welcoming environment with a heavy emphasis on attendee comfort. The conference offered group runs, yoga, a quiet room, a new initiative called Open Sourcing Mental Illness, and a puppy paw-looza (therapy dogs came to the event one night). Another interesting (albeit not-so-successful) experiment was to issue red, yellow, and green stickers to express your social interaction preferences (see photo above). Green was “Talk to me”, yellow was “Just those that I know”, and red was “Not at this time”. I didn’t see any red stickers out there, but it gives you a sense of the lengths to which the organizers went to accommodate everyone.

- There wasn’t much content in the AI/ML/data analytics space beyond Kubeflow (the 800-pound gorilla in the room). There are two ways of looking at this:

  - The organizers could have done a better job bringing in more diverse presenters to cover topics like Spark or Dask on Kubernetes.

  - We should be really grateful to the community for giving Kubeflow so much attention and being so dedicated to its success.

I still would have liked to see a few more hands-on sessions on sample workflows (e.g. a distributed training job or a hyperparameter tuning job).

- Minikube is a great place to start if you want to experiment with Kubernetes on your laptop! I still need to dig into MicroK8s’ strengths and weaknesses as well.

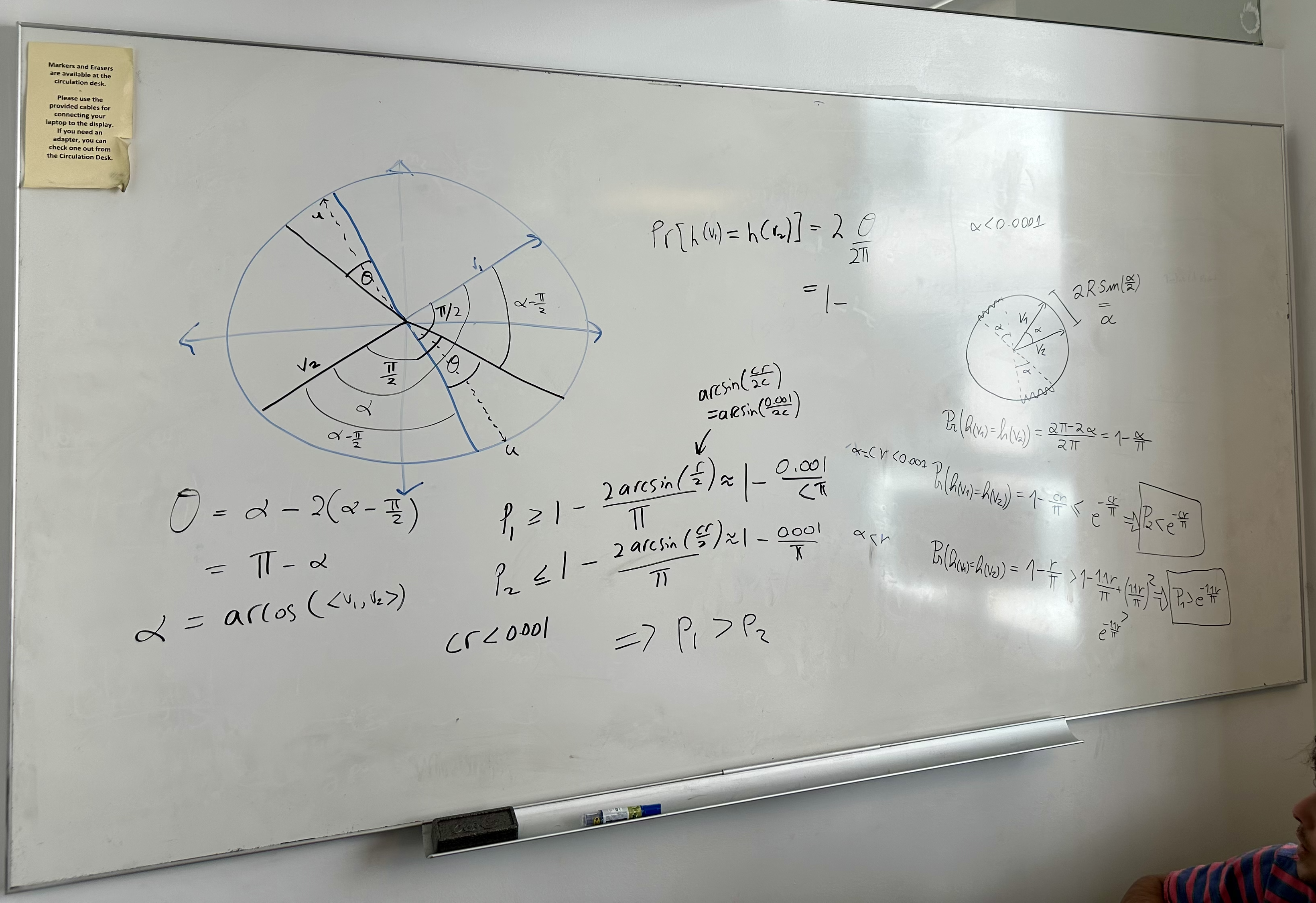

- The one talk that really stood out to me was “Realizing End to End Reproducible Machine Learning on Kubernetes” by the phenomenally talented engineer Suneeta Mall from Nearmap. It’s worth a watch if you’ve ever been curious about determinism in Deep Learning systems.
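For a flavor of what determinism means in practice, here is a minimal sketch of one ingredient: seeding the random number generators that drive weight initialization and data shuffling. It is not taken from the talk. The function names `init_weights` and `shuffled_batches` are hypothetical, and it uses only the Python standard library. Real deep learning stacks have further sources of nondeterminism (for example, some GPU operations) that seeding alone does not remove.

```python
import random


def init_weights(n, seed=None):
    """Return n pseudo-random 'weights' (hypothetical helper).

    With a fixed seed, every call returns the same values.
    """
    rng = random.Random(seed)  # private generator, independent of global state
    return [rng.gauss(0.0, 0.1) for _ in range(n)]


def shuffled_batches(n_samples, batch_size, seed=None):
    """Split sample indices into batches after a seeded shuffle (hypothetical helper)."""
    rng = random.Random(seed)
    indices = list(range(n_samples))
    rng.shuffle(indices)
    return [indices[i:i + batch_size] for i in range(0, n_samples, batch_size)]


if __name__ == "__main__":
    # Same seed, same result: the two "runs" are indistinguishable.
    print(init_weights(3, seed=42) == init_weights(3, seed=42))
    print(shuffled_batches(10, 4, seed=7) == shuffled_batches(10, 4, seed=7))
```

Passing the seed explicitly, rather than relying on global state, keeps each source of randomness visible and easy to log alongside the rest of an experiment's configuration.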

There you have it - a recap of KubeCon in ~2 minutes! If you’re interested in exploring some of my conference follow-ups, check them out below.

Follow-ups

Here are the really cool projects and articles that I intend to follow up on over Thanksgiving Break.

- Wait, what the heck is K8s again? Refresher!

- Schedule (tip: filter the schedule for Machine Learning + Data talks) and all recorded presentations

- KFServing - ML model serving on Kubernetes

- Seldon Alibi - anchors, counterfactuals, contrastive explanations, trust scores

- AIX360 - to be integrated in the near future

- Kale - convert Jupyter Notebooks to Kubeflow Pipelines

- Kale on GitHub

- Feast - Feature Store for Machine Learning

- Not related to K8s, just nerdy stuff: Determinism (or lack thereof) in Deep Learning

What do you think? Leave a comment below.
